mindmap
  root((Machine Learning))
    Supervised Learning
      Regression
        Simple Linear Regression
        Multiple Regression
        Polynomial Regression
        Support Vector Regression
        Random Forest Regression
      Classification
        Logistic Regression
        Decision Tree
        Random Forest
        SVM
        Neural Networks
        Naive Bayes
        k-Nearest Neighbors
    Unsupervised Learning
      Clustering
        K-means
        Hierarchical Clustering
        DBSCAN
      Association Rule
        Apriori / FP-Growth
      Dimensionality Reduction
        PCA
        t-SNE
        Autoencoders
    Reinforcement Learning
      Q-Learning
      Deep Q-Networks
      Policy Gradient Methods

Introduction to Machine Learning

International College of Digital Innovation, CMU

September 11, 2025

Does AI replace the human brain?

No, not really. AI does not “replace” the brain.

AI = specialized tool: It can do narrow tasks (image recognition, text generation, playing chess) much faster than humans.

Human brain = general intelligence: We can reason, imagine, feel emotions, and adapt across different tasks — something AI cannot fully replicate.

AI assists, not replaces: It’s more like a “smart calculator on steroids” that helps us think faster and automate repetitive or data-heavy work.

Human Progress: From Tools to AI

Ancient Times – Basic Tools

- Stone tools, fire, wheel, plow

- Helped humans hunt, farm, and travel more efficiently.

Industrial Revolution (18th–19th Century) – Machines

- Steam engine, textile machines, trains, electricity

- Reduced heavy manual labor, boosted mass production.

20th Century – Automation

- Assembly lines, cars, airplanes, household appliances

- Machines took over repetitive factory work and made life more convenient.

Late 20th Century – Computers & Internet

- Digital computers, databases, internet

- Automated calculation, communication, global information sharing.

21st Century – AI & Robotics

- Machine Learning, autonomous robots, smart assistants

- Now machines can “learn from data,” predict outcomes, and support decision-making.

- Example: Self-driving cars (replace driving labor), ChatGPT (language tasks), robots in warehouses (manual labor).

Big Picture

Tools → Machines → Computers → AI

Each step augments human ability, reducing physical or mental burden.

AI is the next leap, moving from “machines doing physical work” to “machines supporting cognitive work.”

🕰️ Timeline of AI & ML Pioneers

1950 – Alan Turing

- Publishes “Computing Machinery and Intelligence”

Turing argued that instead of debating the abstract philosophical question “Can machines think?”, it is more productive to reformulate it as an operational test of intelligence. He called this test the Imitation Game; it is now known as the Turing Test.

1956 – John McCarthy

Named the field — Artificial Intelligence.

Co-organized the Dartmouth workshop (1956), widely regarded as the founding event of AI as a research field.

Provided core tools (Lisp language) that drove AI research for decades.

- Shaped AI as a discipline with both vision and technical contributions.

1957 – Frank Rosenblatt

First neural network model with learning ability (Perceptron, 1957).

Demonstrated machine learning from data in practice.

Inspired later generations: although single-layer perceptrons have limitations (analyzed by Minsky & Papert in 1969), the concept evolved into multilayer networks trained with backpropagation, which is the basis of modern deep learning.

1959 – Arthur Samuel

Developed Samuel’s Checkers Program

- One of the first computer programs to play a complex board game (checkers).

- Implemented on early IBM computers.

First Self-Learning Program

Used machine learning to improve performance over time.

Applied rote learning (storing past experiences) and generalization (improving future play from prior games).

Coined the term “Machine Learning” (1959)

The definition commonly attributed to him is:

“The field of study that gives computers the ability to learn without being explicitly programmed.”

1980s – Geoffrey Hinton, Yann LeCun, Yoshua Bengio

Pioneers of Deep Learning

Popularize backpropagation and develop convolutional neural networks (CNNs) in the 1980s, followed by deep belief networks in the 2000s

Later known as the “Godfathers of Deep Learning”

1990s – Vladimir Vapnik & Alexey Chervonenkis

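Vapnik and colleagues develop the modern Support Vector Machine (SVM) in the 1990s, building on his earlier work with Chervonenkis

Establish theoretical foundations for statistical learning (VC theory)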

1997 – IBM Deep Blue

May 1997: Deep Blue defeated Garry Kasparov, the reigning World Chess Champion, in a six-game match (2 wins, 1 loss, 3 draws).

This was the first time a computer defeated a World Champion in a classical chess match under standard tournament time controls.

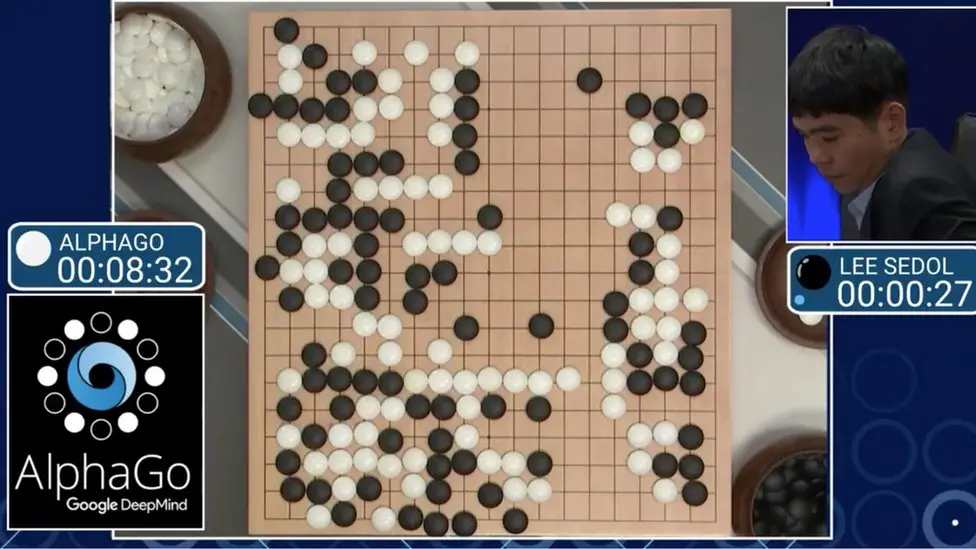

2016 – Demis Hassabis & DeepMind

Develop AlphaGo, defeating world champion Lee Sedol

Marks a major success in deep reinforcement learning

How Do Humans, Animals, and Machines Learn?

Animals

- Learn via trial & error and imitation

- Instinctive survival learning

- Fast in specific contexts

- Limited abstract reasoning

Machines

- Learn via data + algorithms

- Supervised, unsupervised, reinforcement learning

- Detect hidden patterns

- Extremely fast, but no true understanding

Summary: Learning & Work Across Humans, Animals, and Machines

Before Industrial Revolution 1.0 → Work was manual, craft-based, and knowledge passed through teaching/apprenticeship.

Humans contributed creativity, reasoning, innovation → foundation for industrial progress.

In pre-Industrial society, animals were key labor force: plowing, transportation, carrying loads.

Animals learn via instinct & imitation — useful for repetitive survival-related tasks, but limited abstraction.

With Industrial Revolution 1.0 (steam engines), reliance on animal labor began to decline.

Industrial Revolution 1.0 (steam, mechanization): Machines replaced animal physical strength.

Industrial Revolution 2.0 (electricity, mass production): Machines scaled human productivity.

Industrial Revolution 3.0 (computers, automation): Machines replaced routine human tasks.

Industrial Revolution 4.0 (AI, IoT, robotics): Machines began to learn, adapt, and make decisions → shifting from automation to intelligence.

Industrial Revolution 5.0 (human–machine collaboration): Focus on synergy: humans provide creativity & empathy, machines provide speed & precision.

The history of the Industrial Revolutions shows a progression:

Animals powered labor (pre-IR)

Machines replaced muscle (IR 1.0–2.0)

Computers replaced repetitive thinking (IR 3.0–4.0)

AI partners with humans (IR 5.0) for creativity, efficiency, and sustainability.

Note: IR = Industrial Revolution

AI vs ML vs DL

Artificial Intelligence (AI)

Definition: The science of making machines “intelligent”, that is, able to perform tasks that normally require human intelligence.

Scope: Includes symbolic logic, expert systems, search algorithms, robotics, natural language processing, and machine learning.

Key idea: AI is about creating intelligent behavior, not restricted to data-driven learning.

Machine Learning (ML)

Definition: A field within AI that focuses on building algorithms that learn from data instead of relying only on hand-coded rules.

Why subset:

ML is one approach to achieve AI.

Not all AI is ML → e.g., early rule-based expert systems were AI but not ML.

Examples: Decision trees, Support Vector Machines (SVM), k-means clustering, regression.

Deep Learning (DL)

Definition: A specialized branch of ML that uses artificial neural networks with many layers to automatically learn complex patterns.

Why subset:

DL is a specific technique inside ML.

All DL is ML, but not all ML is DL.

Classic ML (e.g., SVM, decision trees) does not use deep neural networks.

Examples: Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), Transformers (GPT, BERT).

Summary

AI is the goal: making machines intelligent.

ML is one path to that goal: machines that learn from data.

DL is one technique within ML: learning with deep neural networks.
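To make the contrast between hand-coded rules and learning from data concrete, here is a minimal sketch. The spam scenario, the link counts, and the function names (`rule_based_is_spam`, `learn_threshold`) are hypothetical and chosen only for illustration.

```python
def rule_based_is_spam(num_links):
    """Hand-coded rule: a person decided that more than 5 links means spam."""
    return num_links > 5


def learn_threshold(examples):
    """Learn the rule from data: pick the threshold that best fits labeled examples."""
    candidates = sorted({n for n, _ in examples})

    def accuracy(threshold):
        hits = sum((n > threshold) == is_spam for n, is_spam in examples)
        return hits / len(examples)

    # max() keeps the first best candidate, so ties go to the smallest threshold.
    return max(candidates, key=accuracy)


# Hypothetical labeled data: (number of links in a message, is it spam?)
examples = [(0, False), (1, False), (2, False), (3, True), (4, True), (8, True)]

learned = learn_threshold(examples)
print("learned threshold:", learned)
print("hand-coded rule on 4 links:", rule_based_is_spam(4))
print("learned rule on 4 links:", 4 > learned)
```

The hand-coded rule stays fixed no matter what the data says, while the learned threshold changes whenever the labeled examples change. That difference is the sense in which ML "learns from data".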

Machine Learning Process

Data Collection: This is the process of gathering raw data from various sources such as databases, sensors, APIs, surveys, or files. The quality and quantity of collected data strongly influence the performance of the model.

Data Preparation: In this stage, the raw data is cleaned, transformed, and organized into a suitable format for training. Tasks include handling missing values, normalizing or standardizing data, feature selection, and splitting data into training, validation, and test sets.
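As a rough illustration of these preparation tasks, the sketch below splits a small feature column, fills missing values, and standardizes it using only the Python standard library. The data values, the 60/20/20 split, and the helper names (`split_rows`, `fill_missing`, `standardize`) are assumptions made for this example.

```python
import random
import statistics


def split_rows(rows, train_frac=0.6, val_frac=0.2, seed=0):
    """Shuffle a copy of the rows, then cut it into train/validation/test parts."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


def fill_missing(values, fill_value):
    """Replace missing entries (None) with the given fill value."""
    return [fill_value if v is None else v for v in values]


def standardize(values, mean, std):
    """Rescale values using a mean and standard deviation computed elsewhere."""
    return [(v - mean) / std for v in values]


# Hypothetical raw feature column with two missing readings.
raw = [12.0, None, 15.0, 9.0, 11.0, None, 14.0, 10.0, 13.0, 16.0]
train, val, test = split_rows(raw)

# Compute the fill value and scaling statistics on the training part only,
# then reuse them for validation and test data to avoid information leakage.
train_mean = statistics.fmean([v for v in train if v is not None])
train, val, test = (fill_missing(part, train_mean) for part in (train, val, test))

mu = statistics.fmean(train)
sigma = statistics.pstdev(train)
train_scaled, val_scaled, test_scaled = (
    standardize(part, mu, sigma) for part in (train, val, test)
)
```

Splitting before computing any statistics is a deliberate choice here: the validation and test sets are meant to stand in for unseen data, so nothing about them should influence the preparation step.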

Model Selection: Different algorithms (e.g., Decision Trees, Logistic Regression, Neural Networks) are considered. The goal is to choose a model type that best fits the problem domain, data structure, and business requirements.

Training: The chosen model is trained using the training dataset. During training, the model learns patterns and relationships by adjusting internal parameters (such as weights in neural networks) to minimize errors.
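The idea of "adjusting internal parameters to minimize errors" can be sketched with batch gradient descent on a simple linear regression. This is one possible training procedure, not the only one. The toy data, the learning rate, and the epoch count are assumptions chosen so the example converges.

```python
def train_linear_regression(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y ~ w * x + b by batch gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction error for every training example with the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Move the parameters a small step against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy data generated from y = 2x + 1, so the ideal parameters are known.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = train_linear_regression(xs, ys)
print(f"w={w:.3f}, b={b:.3f}")
```

On this noise-free data the learned `w` and `b` should approach 2 and 1. With real data the fit is only approximate, and the learning rate usually needs adjusting.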

Hyperparameter Tuning: Unlike parameters learned during training, hyperparameters are set before training (e.g., learning rate, number of layers, max depth). Tuning involves systematically adjusting these values to improve model accuracy, efficiency, and generalization.
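A simple form of tuning is a grid search: train or evaluate the model once per candidate value and keep the value that scores best on the validation set. The sketch below tunes `k` for a tiny one-dimensional k-nearest-neighbors classifier. The dataset, the candidate grid, and the helper names are hypothetical.

```python
from collections import Counter


def knn_predict(train, x, k):
    """Predict the majority label among the k training points nearest to x."""
    neighbors = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


def accuracy(train, data, k):
    """Fraction of points in `data` whose label is predicted correctly."""
    correct = sum(knn_predict(train, x, k) == label for x, label in data)
    return correct / len(data)


# Hypothetical (feature, label) pairs; two training points are deliberately noisy.
train = [(1.0, "a"), (1.5, "a"), (2.0, "a"), (2.4, "b"), (3.0, "a"),
         (6.0, "b"), (6.5, "b"), (7.0, "b"), (7.5, "a"), (8.0, "b")]
validation = [(1.2, "a"), (2.5, "a"), (3.2, "a"),
              (6.2, "b"), (7.4, "b"), (7.8, "b")]

# Grid search: k is fixed before prediction and is never learned from the data.
candidates = [1, 3, 5]
scores = {k: accuracy(train, validation, k) for k in candidates}
best_k = max(scores, key=scores.get)
print(scores, "best k:", best_k)
```

With `k=1` the classifier copies the noisy training labels, so larger values of `k` score better on this validation set. The final model should still be checked on a separate test set, because the validation set has now been used to make a choice.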

Evaluation: The trained model is tested using validation or test datasets to measure performance. Common metrics include accuracy, precision, recall, F1-score, or RMSE, depending on the type of problem (classification or regression).
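The metrics named above can be computed directly from predictions and true values. The following sketch uses the standard definitions for a binary classification problem and for RMSE. The label and prediction lists are made-up values for illustration.

```python
import math


def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)

    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when a class is never predicted or never present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def rmse(y_true, y_pred):
    """Root mean squared error for regression predictions."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical classification labels and predictions.
labels = [1, 0, 1, 1, 0, 0, 1, 0]
predicted = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(labels, predicted)
print(metrics)

# Hypothetical regression targets and predictions.
error = rmse([3.0, 5.0, 7.0, 9.0], [2.0, 5.0, 8.0, 11.0])
print(f"RMSE = {error:.3f}")
```

Accuracy alone can be misleading when one class is rare, which is why precision, recall, and F1 are usually reported alongside it for classification problems.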

Monitoring & Maintenance: Once deployed, the model is continuously monitored to ensure it performs well with new incoming data. Maintenance may include retraining with updated data, detecting model drift, and applying patches or improvements as needed.
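One crude way to watch for drift is to compare incoming feature values with those seen during training. The sketch below flags a batch when its mean moves too far from the training mean, measured in training standard deviations. The threshold of 2.0, the sample values, and the function names are assumptions. Production systems typically rely on more robust statistical tests and also track prediction quality directly.

```python
import statistics


def mean_shift_score(reference, incoming):
    """Distance between the two means, in units of the reference standard deviation."""
    ref_mean = statistics.fmean(reference)
    ref_std = statistics.pstdev(reference)
    return abs(statistics.fmean(incoming) - ref_mean) / ref_std


def drift_detected(reference, incoming, threshold=2.0):
    """Flag the incoming batch when its mean shift exceeds the chosen threshold."""
    return mean_shift_score(reference, incoming) > threshold


# Hypothetical feature values seen at training time and in two later batches.
reference = [10.0, 11.0, 9.0, 10.5, 9.5, 10.0, 10.2, 9.8]
stable_batch = [10.1, 9.9, 10.3, 9.7]
shifted_batch = [13.0, 12.5, 13.4, 12.8]

print("stable batch drifted:", drift_detected(reference, stable_batch))
print("shifted batch drifted:", drift_detected(reference, shifted_batch))
```

A flagged batch does not by itself mean the model is wrong. It is a signal to investigate and, if performance has dropped, to retrain with updated data.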

Machine Learning Algorithms and Models

Supervised Learning

Regression

Simple Linear Regression

Multiple Linear Regression

Classification

Logistic Regression

Decision Tree

Unsupervised Learning

Clustering

K-means

Hierarchical Clustering

Association Rule

- Apriori / FP-Growth